Building NetworkOps: A 79,500-Line AI-Powered Network Automation Platform

Five months, 142 MCP tools, and the journey from "what if I could talk to my network?" to enterprise-grade automation

The Problem

Network engineers spend too much time translating intent into commands. We know what we want—"check if OSPF is healthy," "find what changed overnight," "safely push this config"—but we're forced to remember syntax, parse unstructured output, and manually correlate information across devices.

What if we could just ask?

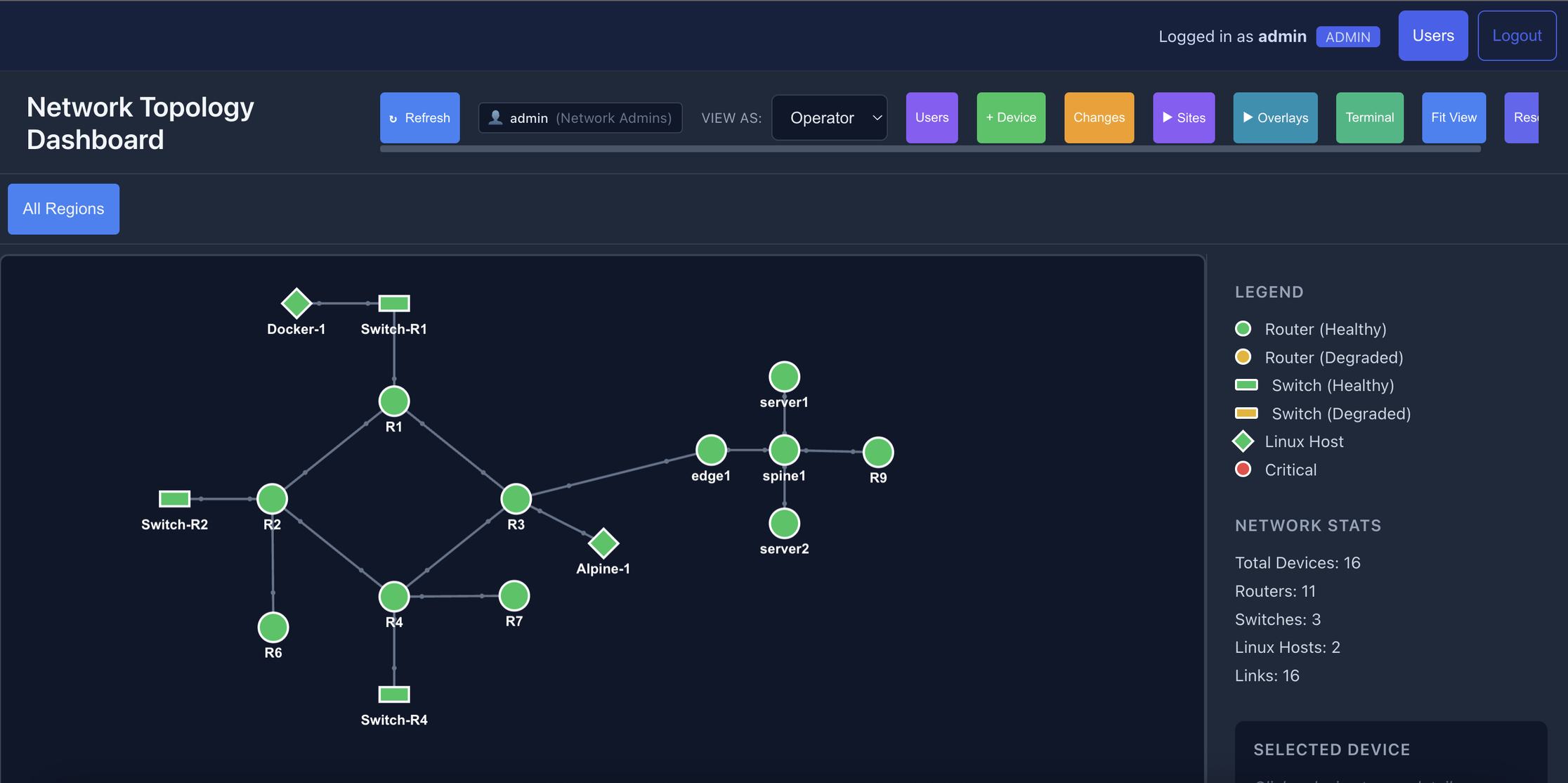

That question led me to build NetworkOps—an enterprise-grade network automation platform built on Anthropic's Model Context Protocol (MCP). Five months and 79,500 lines of Python later, it manages 16 lab devices across 4 vendors, provides 142 automation tools, and handles everything from real-time telemetry to pre-change impact analysis.

Here's how it came together.

What I Built: The Numbers

Total Python: 79,500 lines

MCP Tools: 142

API Endpoints: 35+

Core Modules: 45

Flask Blueprints

Lab Devices: 16 (Cisco, Nokia, FRR, Linux)

Development Time: 5 months

Part 1: Foundation (Month 1)

Why MCP Over REST

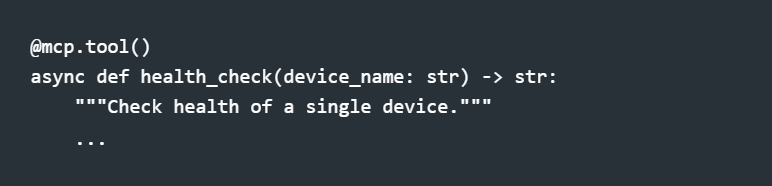

The Model Context Protocol offered something REST couldn't: tools as first-class citizens. Instead of teaching Claude to call /api/devices/R1/health, I could define health_check(device_name) and Claude would understand it natively—including parameter types, descriptions, and return values.

This decision shaped everything that followed. Tools became the API. Natural language became the interface.
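To make "tools as first-class citizens" concrete: the MCP specification describes each tool by a name, a description, and a JSON Schema `inputSchema`, and SDKs such as the official Python SDK derive these from a function's signature and docstring. The stdlib-only sketch below shows roughly what the model receives for a tool like health_check. The `tool_definition` helper and the `timeout` parameter are hypothetical illustrations, not NetworkOps code.

```python
import inspect
import json
from typing import get_type_hints

# Map Python annotations to JSON Schema types (a simplified subset).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_definition(func):
    """Build an MCP-style tool definition (name, description, inputSchema)
    from a function's signature, type hints, and docstring."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        # Parameters without defaults are required arguments.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def health_check(device_name: str, timeout: int = 10) -> dict:
    """Run a health check (CPU, memory, interfaces, routing) on one device."""
    return {"device": device_name, "status": "ok"}  # placeholder body


print(json.dumps(tool_definition(health_check), indent=2))
```

Compare this with a REST route: the description and typed parameters travel with the tool itself, so the model doesn't need separate documentation to know when and how to call it.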

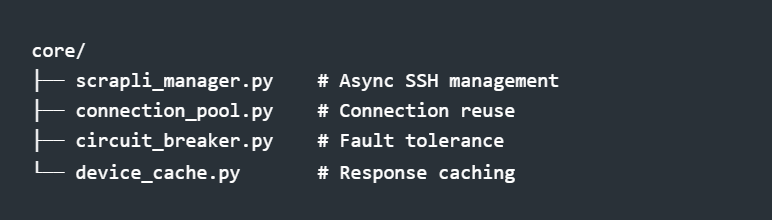

The Async Architecture

My first version used synchronous SSH. Checking 16 devices took over 90 seconds. Scrapli's async capabilities changed everything—parallel SSH connections brought health checks down to 8 seconds. But async introduced complexity: connection pooling, semaphores for rate limiting, graceful error handling when 3 of 16 devices are unreachable.
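The pattern described above (parallel connections, a semaphore for rate limiting, and per-device error handling) can be sketched with plain asyncio. This is a minimal sketch under stated assumptions: `check_device` is a hypothetical stand-in that simulates latency instead of opening a real Scrapli session, and the concurrency cap of 8 is an assumed value.

```python
import asyncio
import random

MAX_CONCURRENT_SESSIONS = 8  # assumed cap on simultaneous SSH sessions


async def check_device(name: str) -> dict:
    """Hypothetical stand-in for an async SSH health check.
    Simulates network latency and fails for 'unreachable' devices."""
    await asyncio.sleep(random.uniform(0.01, 0.05))
    if name.startswith("down"):
        raise ConnectionError(f"{name}: connection timed out")
    return {"device": name, "status": "healthy"}


async def check_all(devices: list) -> dict:
    sem = asyncio.Semaphore(MAX_CONCURRENT_SESSIONS)

    async def bounded(name: str) -> dict:
        # The semaphore limits how many checks are in flight at once.
        async with sem:
            return await check_device(name)

    # return_exceptions=True keeps one unreachable device from failing the batch.
    results = await asyncio.gather(*(bounded(d) for d in devices), return_exceptions=True)
    report = {}
    for name, result in zip(devices, results):
        if isinstance(result, Exception):
            report[name] = {"device": name, "status": "unreachable", "error": str(result)}
        else:
            report[name] = result
    return report


devices = [f"R{i}" for i in range(1, 14)] + ["down-1", "down-2", "down-3"]
report = asyncio.run(check_all(devices))
print(sum(r["status"] == "healthy" for r in report.values()), "healthy")
```

With 16 devices and 3 unreachable, the run reports 13 healthy and 3 unreachable instead of raising on the first failure.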

Part 2: The Tool Explosion (Month 2)

What started as 10 tools became 50, then 100, then 142. Each tool solved a real problem I encountered while managing the lab.

The 142 MCP tools span multiple categories, including:

Device Operations (17 tools): commands, config, and health checks

Compliance (7 tools): golden config validation and scoring

Change Management (7 tools): pre/post validation with auto-rollback

Capacity Planning (12 tools): baselining and anomaly detection

Event Correlation (9 tools): incident grouping and root cause analysis

Orchestration (9 tools): Ansible and Nornir parallel execution

Notifications (15 tools): Slack, Teams, Discord, and PagerDuty

The Modular Refactor

By month 3, network_mcp_async.py had grown to 7,180 lines. I split it into 24 focused modules. The main file shrank to 88 lines—just imports and registration.
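One common way to shrink a main file to "just imports and registration" is to give each module a register function that attaches its tools to a shared server object. The sketch below assumes that pattern; `ToolRegistry`, the module names, and the `register_*` functions are hypothetical, and a real MCP server object would take the registry's place.

```python
from typing import Callable


class ToolRegistry:
    """Minimal stand-in for an MCP server's tool registry."""

    def __init__(self) -> None:
        self.tools: dict = {}

    def tool(self, func: Callable) -> Callable:
        # Decorator: record the function under its own name.
        self.tools[func.__name__] = func
        return func


# --- tools/device_ops.py (hypothetical module) ---
def register_device_ops(registry: ToolRegistry) -> None:
    @registry.tool
    def health_check(device_name: str) -> str:
        return f"{device_name}: healthy"


# --- tools/compliance.py (hypothetical module) ---
def register_compliance(registry: ToolRegistry) -> None:
    @registry.tool
    def compliance_score(device_name: str) -> int:
        return 100


# --- main file: only imports and registration remain ---
MODULE_REGISTRARS = [register_device_ops, register_compliance]

registry = ToolRegistry()
for register in MODULE_REGISTRARS:
    register(registry)

print(sorted(registry.tools))  # ['compliance_score', 'health_check']
```

Adding a new tool category then means writing one module and appending one registrar, without touching the others.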

Part 3: The Dashboard (Months 2–3)

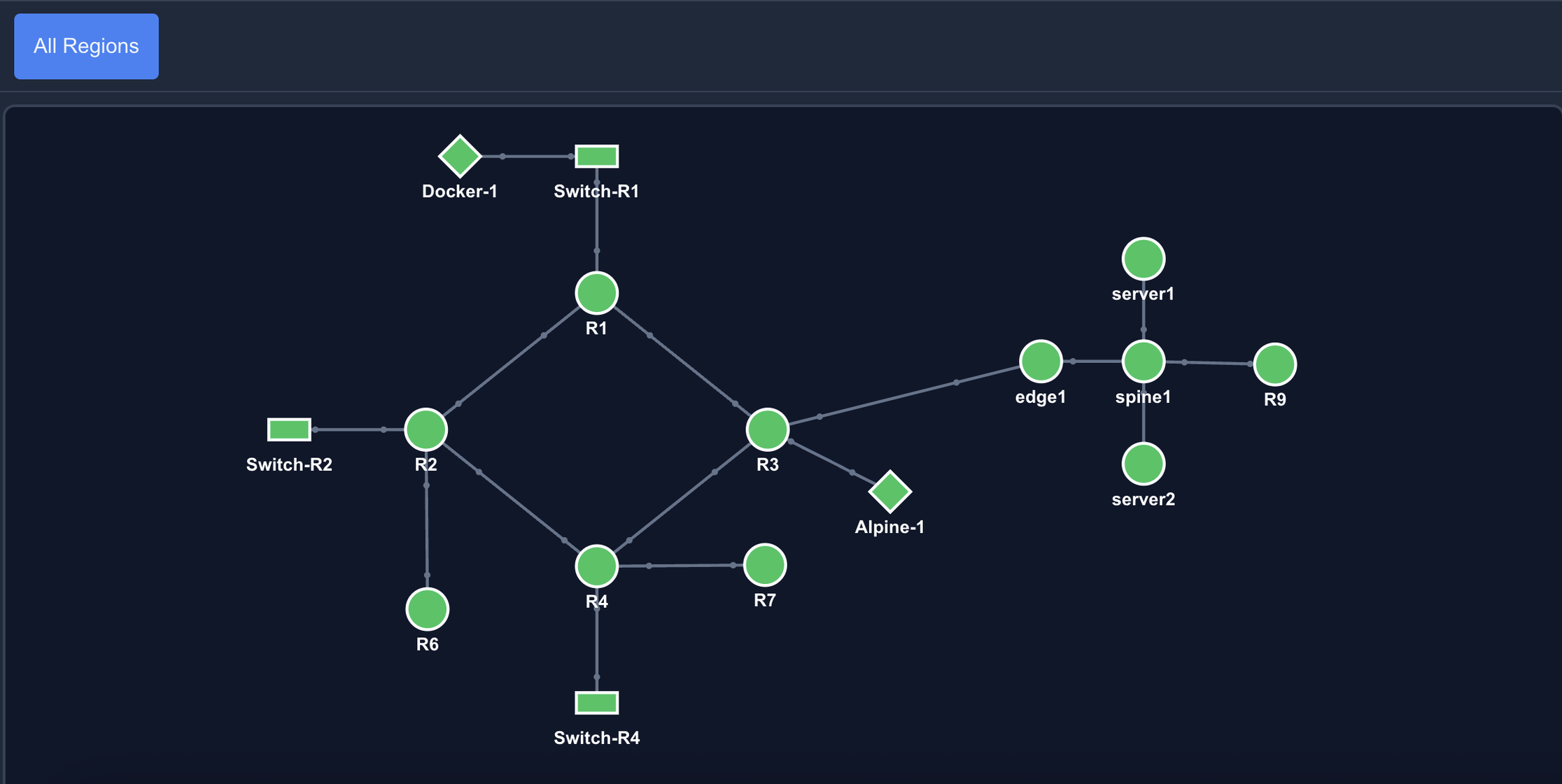

A CLI-only tool wouldn't work for a NOC. The dashboard runs React on port 3000, backed by a Flask REST API on port 5001.

Key features:

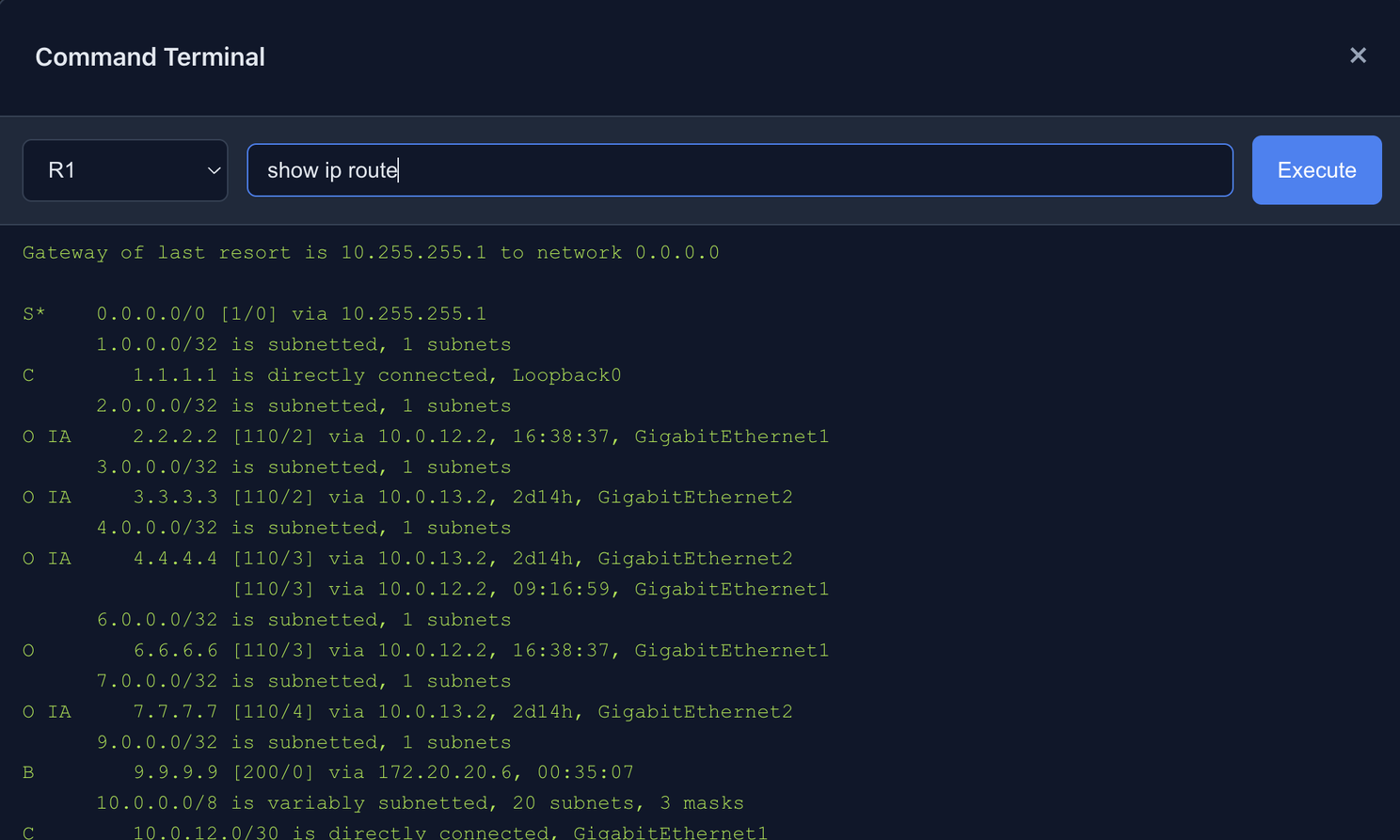

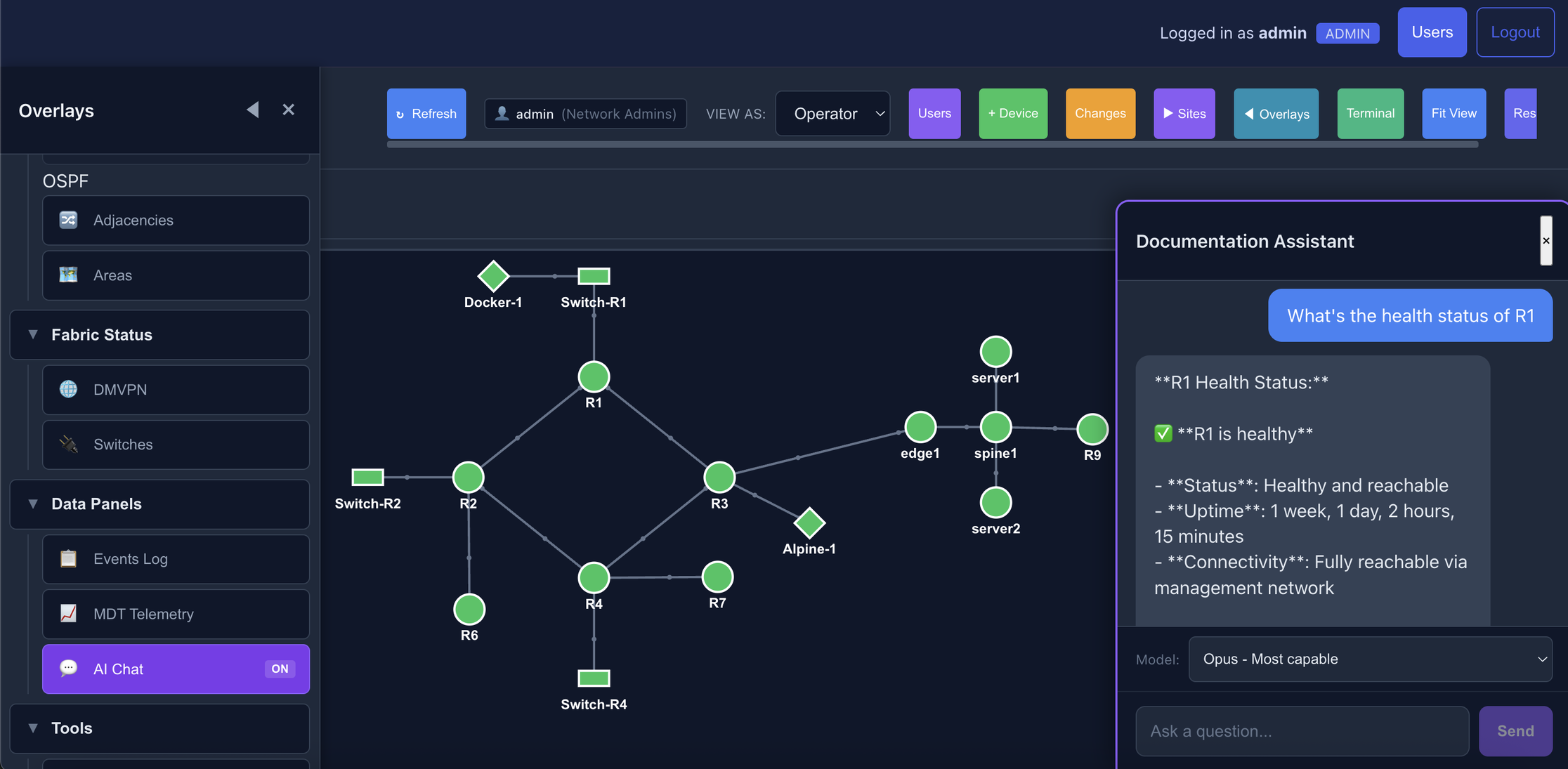

Force-directed topology map showing device health in real time

Web terminal for interactive command execution

AI Chat panel with RAG-augmented responses

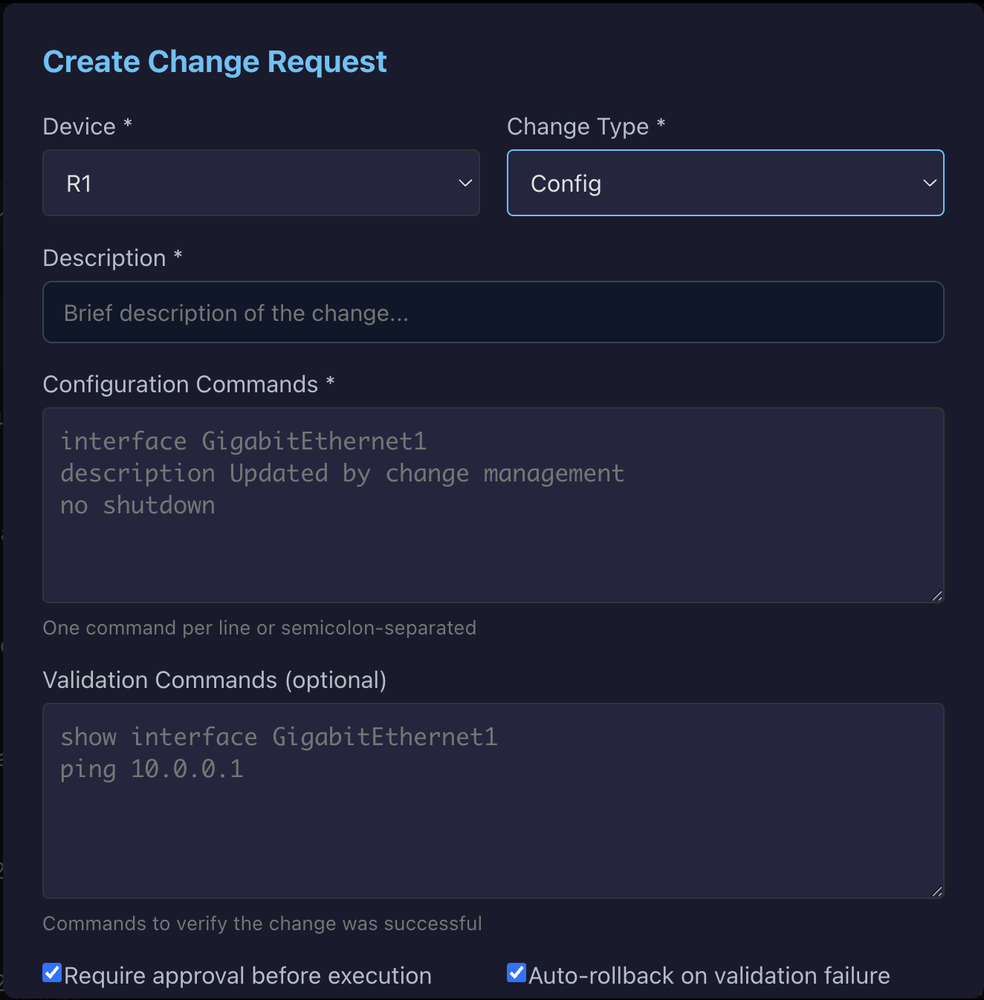

Change request workflow UI with approval gates

Part 4: Enterprise Authentication (Month 3)

Production networks need access control. What started as basic JWT grew into 12 modules. The core ones:

identity.py: user CRUD and authentication

tokens.py: JWT creation, validation, and blacklisting

passwords.py: bcrypt hashing and policy enforcement

permissions.py: RBAC with groups

mfa.py: TOTP-based two-factor authentication

saml.py: SSO integration for enterprise identity providers
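TOTP is a published standard (RFC 6238: HOTP from RFC 4226 applied to a time-step counter), so the algorithm behind a TOTP-based mfa.py can be sketched exactly with the standard library. This illustrates the algorithm, not the module's actual code; the `totp` and `verify` names and the one-step drift window are assumptions, and a maintained library such as pyotp is the usual choice in practice.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP: HOTP (RFC 4226) over a time-step counter, using HMAC-SHA1."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)  # whole time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation (RFC 4226, section 5.3)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def verify(secret: bytes, submitted: str, step: int = 30, window: int = 1) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    now = time.time()
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )


# RFC 6238 Appendix B test vector: SHA-1 secret, T = 59 s, 8 digits.
print(totp(b"12345678901234567890", 59, digits=8))  # 94287082
```

The constant-time `hmac.compare_digest` avoids leaking how many leading digits of a guess were correct.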

Three roles with granular permissions: Admin (full access), Operator (config changes), Viewer (read-only).
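A hierarchical role model like this can be sketched as a permission table where each role inherits everything granted to the roles below it. The permission strings and the strict Viewer < Operator < Admin ordering are assumptions for illustration; the post doesn't list the actual permissions in permissions.py.

```python
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"
    OPERATOR = "operator"
    ADMIN = "admin"


# Hypothetical permission names; each role also inherits the roles below it.
ROLE_PERMISSIONS = {
    Role.VIEWER: {"device:read", "telemetry:read"},
    Role.OPERATOR: {"config:write", "change:submit"},
    Role.ADMIN: {"user:manage", "change:approve"},
}
ROLE_ORDER = [Role.VIEWER, Role.OPERATOR, Role.ADMIN]


def permissions_for(role: Role) -> set:
    """Union of the role's own permissions and those of every lower role."""
    perms = set()
    for r in ROLE_ORDER[: ROLE_ORDER.index(role) + 1]:
        perms |= ROLE_PERMISSIONS[r]
    return perms


def authorize(role: Role, permission: str) -> bool:
    return permission in permissions_for(role)


print(sorted(permissions_for(Role.OPERATOR)))
# ['change:submit', 'config:write', 'device:read', 'telemetry:read']
```

Checking permissions rather than role names at call sites means a fourth role can be added later without editing every tool.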

Part 5: Multi-Vendor Support (Month 3)

My lab started Cisco-only. Production networks aren't. I added Containerlab for multi-vendor testing: FRRouting for BGP/OSPF edge cases, Nokia SR Linux for a different CLI paradigm, and Alpine Linux containers for traffic generation.

LLDP: Universal Discovery

CDP is Cisco-proprietary. LLDP (IEEE 802.1AB) is vendor-neutral and widely supported. The core/lldp.py module supports 9 platforms: Cisco IOS-XE, IOS, and NX-OS; Arista EOS; Juniper Junos; Nokia SR Linux; Linux (lldpd); and HPE Aruba/ProCurve.
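A multi-platform LLDP module usually has two parts: a per-platform command table, and a vendor-neutral neighbor record that each parser produces. The sketch below assumes that split. The platform keys, the `Neighbor` record, and `build_links` are hypothetical, and the CLI commands are assumptions to verify against each platform's documentation. Because every link is normally reported from both ends, building the topology map requires deduplicating those observations.

```python
from dataclasses import dataclass

# Per-platform LLDP commands (assumed; verify against vendor docs).
LLDP_COMMANDS = {
    "cisco_iosxe": "show lldp neighbors detail",
    "cisco_ios": "show lldp neighbors detail",
    "cisco_nxos": "show lldp neighbors detail",
    "arista_eos": "show lldp neighbors detail",
    "juniper_junos": "show lldp neighbors",
    "nokia_srl": "show system lldp neighbor",
    "linux_lldpd": "lldpcli show neighbors",
    "hp_procurve": "show lldp info remote-device",
}


@dataclass(frozen=True)
class Neighbor:
    """Vendor-neutral LLDP neighbor record produced by platform-specific parsers."""
    local_device: str
    local_port: str
    remote_device: str
    remote_port: str


def lldp_command(platform: str) -> str:
    try:
        return LLDP_COMMANDS[platform]
    except KeyError:
        raise ValueError(f"no LLDP command defined for platform {platform!r}") from None


def build_links(neighbors: list) -> set:
    """Collapse neighbor records into undirected links. Each link is usually
    seen from both ends, so sorting the endpoints removes duplicates."""
    links = set()
    for n in neighbors:
        a = (n.local_device, n.local_port)
        b = (n.remote_device, n.remote_port)
        links.add((min(a, b), max(a, b)))
    return links


seen = [
    Neighbor("R1", "Gi2", "R2", "Gi2"),
    Neighbor("R2", "Gi2", "R1", "Gi2"),  # same link, reported by R2
    Neighbor("R2", "Gi3", "srl1", "ethernet-1/1"),
]
print(lldp_command("nokia_srl"))
print(len(build_links(seen)))  # 2
```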

Part 6: The Intelligence Layer (Months 3–4)

Compliance Engine

The compliance engine validates device configs against golden templates, provides weighted scoring (critical violations count more), and generates auto-remediation commands ready to apply.
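Here is one way weighted scoring and remediation output can fit together. It is a minimal sketch under simplifying assumptions: golden rules are exact global-config lines (no hierarchical sections or regex matching), the severity weights are hypothetical, and remediation simply re-adds each missing line. The `Rule` and `check_compliance` names are illustrative, not the engine's API.

```python
from dataclasses import dataclass

# Hypothetical weights: missing a critical line costs far more than a low one.
SEVERITY_WEIGHTS = {"critical": 10, "high": 5, "medium": 2, "low": 1}


@dataclass
class Rule:
    line: str      # exact global-config line the golden template requires
    severity: str  # key into SEVERITY_WEIGHTS


def check_compliance(running_config: str, rules: list) -> tuple:
    """Return a 0-100 weighted score and the commands that would close the gaps."""
    present = {ln.strip() for ln in running_config.splitlines()}
    total = sum(SEVERITY_WEIGHTS[r.severity] for r in rules)
    lost = 0
    remediation = []
    for rule in rules:
        if rule.line not in present:
            lost += SEVERITY_WEIGHTS[rule.severity]
            remediation.append(rule.line)  # naive fix: re-add the missing line
    score = 100.0 * (total - lost) / total if total else 100.0
    return round(score, 1), remediation


golden = [
    Rule("service password-encryption", "critical"),
    Rule("no ip http server", "high"),
    Rule("ntp server 10.0.0.1", "medium"),
    Rule("logging host 10.0.0.5", "low"),
]
running = "hostname R1\nservice password-encryption\nntp server 10.0.0.1\n"
score, fixes = check_compliance(running, golden)
print(score, fixes)  # 66.7 ['no ip http server', 'logging host 10.0.0.5']
```

Here 6 of 18 weight points are missing, so the device scores 66.7. An unweighted count would rate it 50% compliant, even though its critical line is present.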

Change Management

Production changes need guardrails. The change workflow captures pre-state automatically, requires approval for production devices, runs post-validation checks, and auto-rolls back if validation fails.
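The workflow is a fixed sequence: approval gate, pre-state capture, apply, post-state capture, validation, and rollback on failure. The sketch below expresses that sequence with injected callables so the ordering can be tested without real devices. `ChangeRequest`, `execute_change`, and the simulated OSPF state are hypothetical, not the NetworkOps implementation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ChangeRequest:
    device: str
    commands: list
    production: bool
    approved: bool = False
    log: list = field(default_factory=list)


def execute_change(
    cr: ChangeRequest,
    capture_state: Callable[[str], dict],
    apply: Callable[[str, list], None],
    validate: Callable[[dict, dict], bool],
    rollback: Callable[[str, dict], None],
) -> str:
    """Approval gate -> pre-state -> apply -> post-validation -> rollback on failure."""
    if cr.production and not cr.approved:
        cr.log.append("blocked: approval required")
        return "pending_approval"
    pre = capture_state(cr.device)
    cr.log.append("pre-state captured")
    apply(cr.device, cr.commands)
    cr.log.append("change applied")
    post = capture_state(cr.device)
    if validate(pre, post):
        cr.log.append("post-validation passed")
        return "completed"
    rollback(cr.device, pre)
    cr.log.append("post-validation failed: rolled back")
    return "rolled_back"


# Simulated device: this change drops an OSPF adjacency, so validation fails.
state = {"R1": {"ospf_neighbors": 2}}


def capture(dev: str) -> dict:
    return dict(state[dev])


def apply_cmds(dev: str, cmds: list) -> None:
    state[dev]["ospf_neighbors"] -= 1


def restore(dev: str, pre: dict) -> None:
    state[dev] = dict(pre)


def no_lost_neighbors(pre: dict, post: dict) -> bool:
    return post["ospf_neighbors"] >= pre["ospf_neighbors"]


cr = ChangeRequest("R1", ["interface Gi2", "shutdown"], production=True, approved=True)
print(execute_change(cr, capture, apply_cmds, no_lost_neighbors, restore))  # rolled_back
print(state["R1"])  # {'ospf_neighbors': 2}
```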

Event Correlation

When R1's interface goes down, R2's OSPF neighbor loss and R3's BGP peer failure aren't three incidents—they're one. The event correlation engine groups related events, identifies root cause, and reduces alert fatigue.
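A simple correlation rule groups events that occur within a time window and on the same or adjacent devices, then treats the earliest event in each group as the probable root cause. That "earliest wins" rule is a heuristic assumed here for illustration; the post doesn't describe the engine's actual algorithm. The adjacency map, the 60-second window, and the event names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical topology (kept symmetric): R1 links to R2 and R3.
ADJACENCY = {"R1": {"R2", "R3"}, "R2": {"R1"}, "R3": {"R1"}}
WINDOW_SECONDS = 60  # assumed correlation window


@dataclass
class Event:
    ts: float
    device: str
    kind: str


def correlate(events: list) -> list:
    """Group events that are close in time AND on the same or adjacent devices.
    The earliest event in each group is reported as the probable root cause."""
    incidents = []
    for ev in sorted(events, key=lambda e: e.ts):
        for group in incidents:
            related = any(
                ev.device == g.device or ev.device in ADJACENCY.get(g.device, set())
                for g in group
            )
            if related and ev.ts - group[0].ts <= WINDOW_SECONDS:
                group.append(ev)
                break
        else:  # no existing incident matched: start a new one
            incidents.append([ev])
    return [{"root_cause": g[0], "events": len(g)} for g in incidents]


events = [
    Event(100.0, "R1", "interface_down"),
    Event(102.5, "R2", "ospf_neighbor_down"),
    Event(104.0, "R3", "bgp_peer_down"),
    Event(900.0, "R2", "cpu_high"),  # unrelated: outside the window
]
for inc in correlate(events):
    print(inc["root_cause"].device, inc["root_cause"].kind, inc["events"])
```

The three events from the R1 outage collapse into one incident rooted at R1's interface_down, and the later CPU alert stays separate.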

Part 7: The Memory System (Month 4)

Claude doesn't remember previous conversations. The memory system fixes that. Every MCP tool call is logged with timestamp, parameters, device affected, and result summary. When Claude troubleshoots, it sees relevant history.
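The fields mentioned here (timestamp, parameters, device, and result summary) map naturally onto a single indexed table. This is a minimal sketch that assumes SQLite storage, which the post does not specify. The table name and the `log_call` and `recent_history` functions are hypothetical, as is the `push_config` tool name in the example.

```python
import json
import sqlite3
import time
from typing import Optional

conn = sqlite3.connect(":memory:")  # use a file path to persist across sessions
conn.execute(
    """CREATE TABLE tool_calls (
           id      INTEGER PRIMARY KEY,
           ts      REAL NOT NULL,
           tool    TEXT NOT NULL,
           device  TEXT,
           params  TEXT NOT NULL,
           summary TEXT NOT NULL
       )"""
)
# Index supports the main query: recent history for one device.
conn.execute("CREATE INDEX idx_calls_device_ts ON tool_calls (device, ts)")


def log_call(tool: str, params: dict, summary: str,
             device: Optional[str] = None, ts: Optional[float] = None) -> None:
    """Record one tool call; params are stored as JSON text."""
    conn.execute(
        "INSERT INTO tool_calls (ts, tool, device, params, summary) VALUES (?, ?, ?, ?, ?)",
        (ts if ts is not None else time.time(), tool, device, json.dumps(params), summary),
    )
    conn.commit()


def recent_history(device: str, limit: int = 5) -> list:
    """Most recent calls touching a device, newest first, for the model's context."""
    rows = conn.execute(
        "SELECT tool, summary FROM tool_calls WHERE device = ? ORDER BY ts DESC LIMIT ?",
        (device, limit),
    ).fetchall()
    return [f"{tool}: {summary}" for tool, summary in rows]


log_call("health_check", {"device_name": "R1"}, "OSPF 2/2 neighbors FULL", device="R1", ts=1.0)
log_call("push_config", {"device_name": "R1"}, "added NTP server", device="R1", ts=2.0)
log_call("health_check", {"device_name": "R2"}, "healthy", device="R2", ts=3.0)
print(recent_history("R1"))
# ['push_config: added NTP server', 'health_check: OSPF 2/2 neighbors FULL']
```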

RAG System

Part 8: Observability

Model-Driven Telemetry

The MDT collector receives gRPC dial-out telemetry at 5-second intervals—interface stats stream directly to the dashboard via WebSocket. No polling required.
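At its core, the push model is a fan-out: the collector publishes each decoded sample once, and every connected dashboard client receives it without polling. The sketch below shows that hub with one bounded asyncio queue per client. `TelemetryHub` and the drop-oldest policy for slow clients are assumptions, and gRPC decoding and WebSocket framing are omitted.

```python
import asyncio


class TelemetryHub:
    """Fan out telemetry samples to every connected dashboard client.
    Each client (e.g. a WebSocket handler) reads from its own bounded queue."""

    def __init__(self, maxsize: int = 100) -> None:
        self._subscribers = set()
        self._maxsize = maxsize

    def subscribe(self) -> asyncio.Queue:
        q = asyncio.Queue(maxsize=self._maxsize)
        self._subscribers.add(q)
        return q

    def unsubscribe(self, q: asyncio.Queue) -> None:
        self._subscribers.discard(q)

    def publish(self, sample: dict) -> None:
        for q in self._subscribers:
            if q.full():
                q.get_nowait()  # slow client: drop its oldest sample
            q.put_nowait(sample)


async def demo() -> list:
    hub = TelemetryHub()
    client = hub.subscribe()
    # In a real collector, each decoded gRPC update would be published here.
    for i in range(3):
        hub.publish({"device": "R1", "interface": "Gi1", "in_octets": 1000 * i})
    return [await client.get() for _ in range(3)]


print(asyncio.run(demo()))
```

Bounding each queue keeps one stalled browser tab from growing memory without limit, at the cost of that client missing older samples.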

Traffic Baselining

The baselining system establishes normal patterns over 7 days, calculates statistical baselines (mean, std_dev, percentiles), and flags an anomaly when a current value deviates from the mean by more than 3 standard deviations.
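The baseline and the 3-sigma test can be sketched with Python's `statistics` module. The function names, the hourly sampling interval, and the synthetic utilization data are assumptions for illustration; the anomaly check is two-sided, so it catches unusual drops as well as spikes.

```python
import statistics


def build_baseline(samples: list) -> dict:
    """Summarize a window of historical samples (e.g. 7 days of utilization)."""
    pct = statistics.quantiles(samples, n=100)  # 99 cut points: pct[k-1] is the k-th percentile
    return {
        "mean": statistics.fmean(samples),
        "std_dev": statistics.stdev(samples),
        "p50": pct[49],
        "p95": pct[94],
        "p99": pct[98],
    }


def is_anomaly(value: float, baseline: dict, threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations from the mean."""
    if baseline["std_dev"] == 0:
        return value != baseline["mean"]
    z = (value - baseline["mean"]) / baseline["std_dev"]
    return abs(z) > threshold


# Synthetic hourly utilization (%) for 7 days: a repeating daily ramp from 40 to 51.5.
samples = [40.0 + (h % 24) * 0.5 for h in range(7 * 24)]
baseline = build_baseline(samples)
print(is_anomaly(95.0, baseline), is_anomaly(50.0, baseline))  # True False
```

A single global mean ignores daily seasonality. Keeping separate baselines per hour of day is a common refinement when traffic has strong business-hours patterns.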

Lessons Learned

1. Async is non-negotiable. Network automation means waiting on devices. Async lets you wait on 16 simultaneously instead of sequentially.

2. Modularize at 1,000 lines. I waited until 7,000. The refactor was painful. Set a hard limit and stick to it.

3. Tools > Endpoints. health_check("R1") beats POST /api/health {"device": "R1"}. Design for conversation, not CRUD.

4. Impact analysis first. Know what will break before you build the rollback system.

5. Memory makes AI useful. Without memory, every conversation starts from zero. Context is everything.

What's Next

The roadmap includes gNMI streaming for multi-vendor telemetry, a Terraform provider for infrastructure-as-code, multi-tenancy for MSP support, and natural language config generation.

In December, I was invited by NetBox Labs to showcase the lab and demonstrate the platform's integration capabilities. I'm looking forward to that experience and exploring how NetworkOps can complement their ecosystem.

The Bottom Line

NetworkOps shows that AI-native network automation is practical today. Not as a demo, but as a system built to production standards, with enterprise authentication, pre-change impact analysis, automated rollback, multi-vendor support, real-time telemetry, and compliance scoring.

Five months. 79,500 lines. 142 tools. One question answered: Yes, you can talk to your network.

Elliot Conner builds AI-powered infrastructure automation.